Summary: Spimes are a wonderful intellectual framework for thinking about the Internet of Things. This blog post shows how spimes can be created using picos and then applied to a sensor platform called ESProto.

Connected things need a platform to accomplish much more than sending data. Picos make an ideal system for creating the backend for connected devices. This post shows how I did that for the ESProto sensor system and talks about the work my lab is currently doing to make that easier than ever.

ESProto

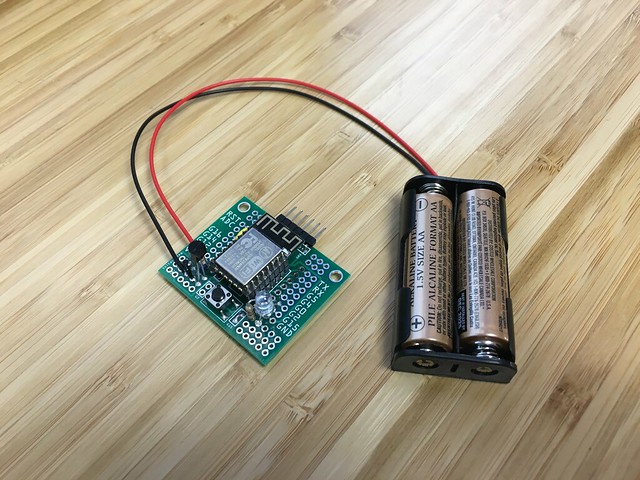

ESProto is a collection of sensor platforms based on the ESP8266, an Arduino-compatible microcontroller with built-in WiFi. My friend Scott Lemon is the inventor of ESProto. Scott gave me a couple of early prototypes to play with: a simple temperature sensor and a multi-sensor array (MSA) that includes two temperature transducers (one on a probe), a pressure transducer, and a humidity transducer.

One of the things I love about Scott's design is that the sensors aren't hardwired to a specific platform. As part of setting up a sensor unit, you provide a URL to which the sensor will periodically POST (via HTTP) a standard payload of data. In stark contrast to most of the Internet of Things products we see on the market, ESProto lets you decide where the data goes.1

Setting up an ESProto sensor device follows the standard methodology for connecting something without a user interface to a WiFi network: (1) put the device in access point mode, (2) connect to it from your phone or laptop, (3) fill out a configuration screen, and (4) reboot. The only difference with the ESProto is that in addition to the WiFi configuration, you enter the data POST URL.

Once configured, the ESProto periodically wakes, makes its readings, POSTs the data payload, and then goes back to sleep. The sleep period can be adjusted, but is nominally 10 minutes.
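As a rough illustration of the reporting step, the following Python sketch builds (but does not send) the kind of HTTP POST a configured device might make on each wake cycle. The payload shape, the JSON encoding, the URL, and the sample values are all assumptions for illustration; this post does not reproduce the actual ESProto payload. The field names `emitterGUID` and `healthPercent` are borrowed from the rules shown later in this post.

```python
import json
import urllib.request

# Hypothetical URL: in practice this is whatever was entered on the
# device's configuration screen.
POST_URL = "https://example.com/event/my-channel-id"
SLEEP_MINUTES = 10  # nominal sleep period between wake cycles


def build_heartbeat_request(url, readings):
    """Build (but do not send) the POST request for one wake cycle."""
    body = json.dumps(readings).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Assumed payload layout and values, for illustration only.
sample = {"emitterGUID": "EXAMPLE-GUID", "healthPercent": 87}
request = build_heartbeat_request(POST_URL, sample)
```

Because the destination is just a URL, pointing the device at a different backend only means changing that one configuration value.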

The ESProto design can support devices with many different types of transducers in myriad configurations. Scott anticipates that they will be used primarily in commercial settings.

Spimes and Picos

A spime is a computational object that can track the metadata about a physical object or concept through space and time. Bruce Sterling coined the neologism as a contraction of space and time. Spimes can contain profile information about an object, provenance data, design information, historical data, and so on. Spimes provide an excellent conceptual model for the Internet of Things.

Picos are persistent compute objects. Picos run in the pico engine and provide an actor-model for distributed programming. Picos are always on; they are continually listening for events on HTTP-based event channels. Because picos have individual identity, persistent state, customizable programming, and APIs that arise from their programming, picos make a great platform for implementing spimes.2

Because picos are always online, they are reactive. When used to create spimes, they don't simply hold metadata as passive repositories, but rather can be active participants in the Internet of Things. While they are cloud-based, picos don't have to run in a single pico engine to work together. Picos employ a hosting model that allows them to be run on different pico engines and to be moved between them.

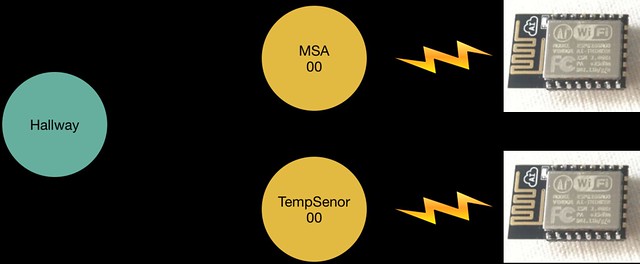

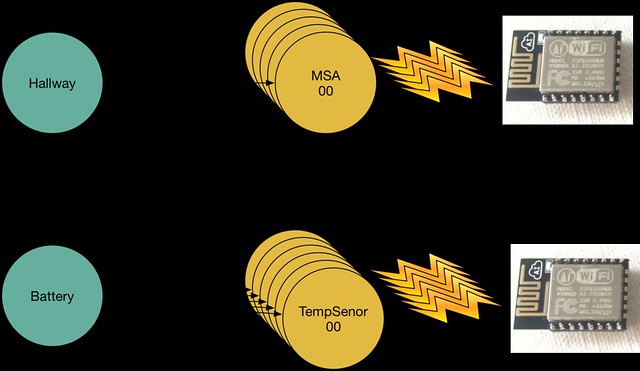

Spimes can also represent concepts. For organizing devices, we use pico-based spimes to represent not only the device itself, but also collections of spimes that have some meaning. For example, we might have two spimes, representing a multi-sensor array and a temperature sensor installed in a hallway, and want to create a collection of sensors in the hallway. The collection is itself a spime that stores and processes metadata for the hallway, including processing and aggregating data readings from the various sensors in the hallway.

Spimes can belong to more than one collection. The same two sensors that are part of the hallway collection might also, for example, be part of a battery collection that is collecting low battery notifications and is used by maintenance to ensure the batteries are always replaced. The battery collection could also be used to control the battery conservation strategies of various sensor types. For example, sensors could increase their sleep time after they drop below a given battery level.
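The two ideas in this paragraph, overlapping collection membership and a battery conservation policy, can be sketched in a few lines of Python. This is a minimal sketch and not part of any pico framework: the collection names, spime names, battery level, and stretch factor are all hypothetical.

```python
# Hypothetical collections: the same spimes appear in more than one.
COLLECTIONS = {
    "hallway": {"msa_00", "temp_00"},
    "battery": {"msa_00", "temp_00"},
}


def collections_for(spime_name):
    """Return the sorted names of every collection a spime belongs to."""
    return sorted(name for name, members in COLLECTIONS.items()
                  if spime_name in members)


def next_sleep_minutes(health_percent, base_minutes=10,
                       low_battery_level=20, stretch_factor=3):
    """Lengthen the sleep period once the battery drops below a level.

    The default level and factor are illustrative assumptions.
    """
    if health_percent < low_battery_level:
        return base_minutes * stretch_factor
    return base_minutes
```

A battery collection could apply a policy like `next_sleep_minutes` uniformly, or vary the parameters by sensor type.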

Spimes and ESProto

Pico-based spimes provide an excellent platform for making use of ESProto and other connected devices. We can create a one-to-one mapping between ESProto devices and a spime that represents them. This has several advantages:

- things don't need to be very smart—a low-power Arduino-based processor is sufficient for powering the ESProto, but it cannot keep up with the computing needs of a large, flexible collection of sensors without a significant increase in cost. Using spimes, we can keep the processing needs of the devices light, and thus inexpensive, without sacrificing processing and storage requirements.

- things can be low power—the ESProto device is designed to run on battery power and thus needs to be very low power. This implies that they can't be always available to answer queries or respond to events. Having a virtual, cloud-based persona for the device enables the system to treat the devices as if they are always on, caching readings from the device and instructions for the device.

- things can be loosely coupled—the actor-model of distributed computing used by picos supports loosely coupled collections of things working together while maintaining their independence through isolation of both state and processing.

- each device and each collection get its own identity—there is intellectual leverage in closely mapping the computation domain to the physical domain3. We also gain tremendous programming flexibility in creating an independent spime for each device and collection.

Each pico-based spime can present multiple URL-based channels that other actors can use. In the case of ESProto devices, we create a specific channel for the transducer to POST to. The device is tied, by the URL, to the specific spime that represents it.
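To make the tie between URL and spime concrete, here is a small Python sketch of a lookup from channel identifier to spime. The registry, the channel identifiers, and the `/event/<channel_id>` URL layout are hypothetical and chosen only for illustration; they are not the pico engine's actual URL scheme.

```python
from urllib.parse import urlparse

# Hypothetical channel registry: channel identifier -> spime name.
CHANNELS = {
    "chan-msa-00": "msa_00",
    "chan-temp-00": "temp_00",
}


def spime_for_url(url):
    """Return the spime that owns the channel named last in the URL path."""
    channel_id = urlparse(url).path.rstrip("/").split("/")[-1]
    return CHANNELS.get(channel_id)
```

Since each device is configured with its own channel URL, the backend never needs the device to identify itself separately; the channel does that.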

Using ESProto with Picos

My lab is creating a general, pico-based spime framework. ESProto presents an excellent opportunity to design the new spime framework.

I used manually configured picos to explore how the spime framework should function. To do this, I used our developer tool to create and configure, by hand, a pico for each individual sensor I own and put them in a collection.

I also created some initial rulesets for the ESProto devices and for a simple collection. The goal of these rulesets is to test readings from the ESProto device against a set of saved thresholds and notify the collection whenever there's a threshold violation. The collection merely logs the violation for inspection.

The Device Pico

I created two rulesets for the device pico: esproto_router.krl and esproto_device.krl. The router is primarily concerned with getting the raw data dump from the ESProto sensor and making sense of it using the semantic translation pattern. For example, the following rule, check_battery, looks at the ESProto data and determines whether or not the battery level is low. If it is, then the rule raises the battery_level_low event:

    rule check_battery {
      select when wovynEmitter thingHeartbeat
      pre {
        sensor_data = sensorData();
        sensor_id = event:attr("emitterGUID");
        sensor_properties = event:attr("property");
      }
      if (sensor_data{"healthPercent"}) < healthy_battery_level
      then noop()
      fired {
        log "Battery is low";
        raise esproto event "battery_level_low"
          with sensor_id = sensor_id
           and properties = sensor_properties
           and health_percent = sensor_data{"healthPercent"}
           and timestamp = time:now();
      } else {
        log "Battery is fine";
      }
    }

The resulting event, battery_level_low, is much more meaningful and precise than the large data dump that the sensor provides. Other rules, in this or other rulesets, can listen for the battery_level_low event and respond appropriately.
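For readers unfamiliar with KRL, the same semantic translation can be sketched in Python. This is an illustrative analogue of check_battery, not the ruleset itself: the threshold value and the way the heartbeat is passed in (as plain dictionaries) are assumptions.

```python
HEALTHY_BATTERY_LEVEL = 20  # assumed threshold, in percent


def check_battery(event_attrs, sensor_data, now):
    """Translate a raw heartbeat into a battery_level_low event, or None."""
    health = sensor_data["healthPercent"]
    if health >= HEALTHY_BATTERY_LEVEL:
        return None  # battery is fine; nothing to raise
    return {
        "domain": "esproto",
        "type": "battery_level_low",
        "attrs": {
            "sensor_id": event_attrs["emitterGUID"],
            "properties": event_attrs["property"],
            "health_percent": health,
            "timestamp": now,
        },
    }
```

The point of the pattern is that downstream code reacts to a small, named event rather than re-parsing the full heartbeat.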

Another rule, route_readings, also provides a semantic translation of the ESProto data for each sensor reading. This rule is more general than the check_battery rule, raising the appropriate event for any sensor that is installed in the ESProto device.

    rule route_readings {
      select when wovynEmitter thingHeartbeat
      foreach sensorData(["data"]) setting (sensor_type, sensor_readings)
      pre {
        // parenthesized so klog logs the whole event name, not just "_reading"
        event_name = ("new_" + sensor_type + "_reading").klog("Event ");
      }
      always {
        raise esproto event event_name attributes
          {"readings": sensor_readings,
           "sensor_id": event:attr("emitterGUID"),
           "timestamp": time:now()
          };
      }
    }

This rule constructs the event from the sensor type in the sensor data and will thus adapt to different sensors without modification. In the case of the MSA, this would raise a new_temperature_reading, a new_pressure_reading, and a new_humidity_reading from the sensor heartbeat. Again, other interested rules could respond to these as appropriate.
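The way the event name is derived from the sensor type can be sketched in Python as follows. The shape of the sensor data (a `data` map from sensor type to a list of readings) is inferred from the foreach in the rule and should be treated as an assumption.

```python
def route_readings(sensor_data, emitter_guid, now):
    """Return one new_<type>_reading event per sensor type in the data."""
    events = []
    for sensor_type, readings in sensor_data["data"].items():
        events.append({
            "domain": "esproto",
            "type": "new_" + sensor_type + "_reading",
            "attrs": {
                "readings": readings,
                "sensor_id": emitter_guid,
                "timestamp": now,
            },
        })
    return events
```

Nothing in the function names a particular sensor type, which is why a device with a new kind of transducer would need no change here.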

The esproto_device ruleset provides the means of setting thresholds and checks events from the route_readings rule shown above for threshold violations. The check_threshold rule listens for the events raised by the route_readings rule:

    rule check_threshold {
      select when esproto new_temperature_reading
               or esproto new_humidity_reading
               or esproto new_pressure_reading
      foreach event:attr("readings") setting (reading)
      pre {
        event_type = event:type().klog("Event type: ");
        // thresholds
        threshold_type = event_map{event_type};
        threshold_map = thresholds(threshold_type);
        lower_threshold = threshold_map{["limits","lower"]};
        upper_threshold = threshold_map{["limits","upper"]};
        // sensor readings
        data = reading.klog("Reading from #{threshold_type}: ");
        reading_value = data{reading_map{threshold_type}};
        sensor_name = data{"name"};
        // decide
        under = reading_value < lower_threshold;
        over = upper_threshold < reading_value;
        msg = under => "#{threshold_type} is under threshold: #{lower_threshold}"
            | over  => "#{threshold_type} is over threshold: #{upper_threshold}"
            |          "";
      }
      if( under || over ) then noop();
      fired {
        raise esproto event "threshold_violation" attributes
          {"reading": reading.encode(),
           "threshold": under => lower_threshold | upper_threshold,
           "message": "threshold violation: #{msg} for #{sensor_name}"
          }
      }
    }

The rule is made more complex by its generality. A given sensor can have multiple readings of a given type (e.g. the MSA shown in the picture at the top of this post contains two temperature sensors), so a foreach is used to check each reading for a threshold violation. The rule also constructs an appropriate message to deliver with the violation, if one occurs. The rule conditional checks whether a threshold violation has occurred and, if it has, the rule raises the threshold_violation event.
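The decision logic of check_threshold can be sketched in Python like this. It is an analogue under assumptions, not a port: the thresholds are passed in directly rather than looked up, and the reading value is converted with `float` because the readings in the sample log in this post appear as strings.

```python
def check_threshold(threshold_type, reading, value_key, lower, upper):
    """Return a threshold_violation event for one reading, or None."""
    value = float(reading[value_key])  # sample readings are strings
    under = value < lower
    over = upper < value
    if not (under or over):
        return None
    if under:
        msg = f"{threshold_type} is under threshold: {lower}"
    else:
        msg = f"{threshold_type} is over threshold: {upper}"
    return {
        "domain": "esproto",
        "type": "threshold_violation",
        "attrs": {
            "reading": reading,
            "threshold": lower if under else upper,
            "message": f"threshold violation: {msg} for {reading['name']}",
        },
    }


def check_all(threshold_type, readings, value_key, lower, upper):
    """Check every reading of one type, as the rule's foreach does."""
    results = (check_threshold(threshold_type, r, value_key, lower, upper)
               for r in readings)
    return [event for event in results if event is not None]
```

For example, calling `check_all("temperature", readings, "temperatureF", 60, 76)` would check both temperature transducers on the MSA against the same limits; the limits 60 and 76 are illustrative.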

In addition to rules inside the device pico that might care about a threshold violation, the esproto_device ruleset also contains a rule dedicated to routing certain events to the collections that the device belongs to. The route_to_collections rule routes all threshold_violation and battery_level_low events to any collection to which the device belongs.

    rule route_to_collections {
      select when esproto threshold_violation
               or esproto battery_level_low
      foreach collectionSubscriptions() setting (sub_name, sub_value)
      pre {
        eci = sub_value{"event_eci"};
      }
      event:send({"cid": eci}, "esproto", event:type())
        with attrs = event:attrs();
    }

Again, this rule makes use of a foreach to loop over the collection subscriptions and send the event upon which the rule selected to the collection.

This is a fairly simple routing rule that just routes all interesting events to all the device's collections. A more sophisticated router could use attributes on the subscriptions to pick what events to route to which collections.
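One way such a more selective router might look is sketched below in Python. The `events` attribute on a subscription is hypothetical (nothing in the rulesets above defines it); a subscription without it receives everything, which matches the behavior of the simple rule.

```python
def route_to_collections(event_type, attrs, subscriptions):
    """Return the (eci, domain, type, attrs) sends for interested collections."""
    sends = []
    for sub_name, sub_value in subscriptions.items():
        wanted = sub_value.get("events")  # hypothetical subscription attribute
        if wanted is None or event_type in wanted:
            sends.append((sub_value["event_eci"], "esproto", event_type, attrs))
    return sends
```

With this shape, a battery collection could subscribe only to battery_level_low events while a hallway collection continues to receive all of them.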

The Collection Pico

At present, the collection pico runs a simple rule, log_violation, that merely logs the violation. Whenever it sees a threshold_violation event, it formats the readings and messages and adds a timestamp4:

    rule log_violation {
      select when esproto threshold_violation
      pre {
        readings = event:attr("reading").decode();
        timestamp = time:now(); // should come from device
        new_log = ent:violation_log
                    .put([timestamp], {"reading": readings,
                                       "message": event:attr("message")})
                    .klog("New log ");
      }
      always {
        set ent:violation_log new_log
      }
    }

A request to see the violations results in a JSON structure like the following:

    {"2016-05-02T19:03:36Z":
       {"reading": {
          "temperatureC": "26",
          "name": "probe temp",
          "transducerGUID": "5CCF7F0EC86F.1.1",
          "temperatureF": "78.8",
          "units": "degrees"
        },
        "message": "threshold violation: temperature is over threshold of 76 for probe temp"
       },
     "2016-05-02T20:03:18Z":
       {"reading": {
          "temperatureC": "27.29",
          "name": "enclosure temp",
          "transducerGUID": "5CCF7F0EC86F.1.2",
          "units": "degrees",
          "temperatureF": "81.12"
        },
        "message": "threshold violation: temperature is over threshold of 76 for enclosure temp"
       },
     ...
    }

We have now constructed a platform from some generic rules that records threshold violations for the primary types of transducers the ESProto platform accepts.

A more complete system would entail rules that do more than just log the violations, allow for more configuration, and so on.
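One small step in that direction concerns timestamps. As the notes at the end of this post suggest, the log entry would ideally use a timestamp supplied by the device and fall back to the time of receipt only when none is present. The Python sketch below shows that fallback; the `timestamp` event attribute and the extra `received` field are assumptions, since the threshold_violation event shown earlier does not carry a timestamp.

```python
def log_violation(violation_log, attrs, received_at):
    """Return a new log with the violation added, keyed by timestamp."""
    # Prefer the device's timestamp; fall back to the time of receipt.
    timestamp = attrs.get("timestamp") or received_at
    new_log = dict(violation_log)  # leave the caller's log untouched
    new_log[timestamp] = {
        "reading": attrs["reading"],
        "message": attrs["message"],
        "received": received_at,
    }
    return new_log
```

Keeping both times makes message delivery delays visible without losing the order in which readings were actually taken.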

Spime Design Considerations

The spime framework that we are building generalizes the ideas and functionality developed for the Fuse connected car platform. At the same time, it leverages what we learned from the Squaretag system.

The spime framework will make working with devices like ESProto easier because developers will be able to define a prototype for each device type that defines the channels, rulesets, and initialization events for a new pico. For example, the multi-sensor array could be specified using a prototype such as the following:

    {"esproto-msa-16266":
       {"channels": {"name": "transducer",
                     "type": "ESProto"
                    },
        "rulesets": [{"name": "esproto_router.krl",
                      "rid": "b16x37"
                     },
                     {"name": "esproto_device.krl",
                      "rid": "b16x38"
                     }
                    ],
        "initialization": [{"domain": "esproto",
                            "type": "reset"
                           }
                          ]
       },
     "esproto-temp-2788": {...},
     ...
    }

Given such a prototype, a pico representing the spime for the ESProto multi-sensor array could be created by the following rule action5:

    wrangler:new_child() with name = "msa_00" and prototype = "esproto-msa-16266"

Assuming a collection for the hallway already exists, another action would put the newly created child in the collection6:

    spimes:add_to_collection("hallway", "msa_00") with
      collection_role = "" and
      subscriber_role = "esproto_device"

This action would add the spime named "msa_00" to the collection named "hallway" with the proper subscriptions between the device and collection.
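To show what these two actions might do with a prototype, here is a Python sketch of prototype-driven creation followed by collection membership. It only models the bookkeeping; the function names mirror the actions above, but the data structures and behavior are assumptions, since the framework is still being designed.

```python
# Prototype copied from the example above; other prototypes omitted.
PROTOTYPES = {
    "esproto-msa-16266": {
        "channels": {"name": "transducer", "type": "ESProto"},
        "rulesets": [
            {"name": "esproto_router.krl", "rid": "b16x37"},
            {"name": "esproto_device.krl", "rid": "b16x38"},
        ],
        "initialization": [{"domain": "esproto", "type": "reset"}],
    },
}


def new_child(name, prototype):
    """Create a record for a new spime from a named prototype."""
    spec = PROTOTYPES[prototype]
    return {
        "name": name,
        "channels": [dict(spec["channels"])],
        "rulesets": [ruleset["rid"] for ruleset in spec["rulesets"]],
        "pending_events": [dict(event) for event in spec["initialization"]],
    }


def add_to_collection(collections, collection, spime,
                      collection_role="", subscriber_role=""):
    """Record a subscription between a collection and a spime."""
    members = collections.setdefault(collection, {})
    members[spime["name"]] = {
        "collection_role": collection_role,
        "subscriber_role": subscriber_role,
    }
    return collections


msa = new_child("msa_00", "esproto-msa-16266")
collections = add_to_collection({}, "hallway", msa,
                                subscriber_role="esproto_device")
```

The benefit of the prototype is that everything done by hand earlier in this post (creating the channel, installing the rulesets, sending the initial event) is captured once per device type.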

Conclusions

This post has discussed the use of picos to create spimes, explained why spimes are a good organizing idea for the Internet of Things, and demonstrated how they would work using ESProto sensors as a specific example. While the demonstration given here is not sufficient for a complete ESProto system, it is a good foundation and shows the principles that would be necessary to use spimes to build an ESProto platform.

There are several important advantages to the resulting system:

- The resulting system is much easier to set up than creating a backend platform from scratch.

- The use of picos with their actor-model of distributed programming eases the burden associated with programming large collections of independent processes.

- The system naturally scales to meet demand.

Notes:

- I believe this is pretty common in the commercial transducer space. Consumer products build a platform and link their devices to it to provide a simple user experience.

- The Squaretag platform was implemented on an earlier version of picos. Squaretag provided metadata about physical objects and was our first experiment with spimes as a model for organizing the Internet of Things.

- I am a big fan of domain-driven design and believe it applies as much to physical objects in the Internet of Things as it does to conceptual objects in other programming domains.

- Ideally, the timestamp would come from the device pico itself to account for message delivery delays and the collection would only supply one if it was missing, or perhaps add a "received" timestamp.

- wrangler is the name of the pico operating system, the home of the operations for managing pico lifecycles.

- spimes would be the name of the framework for managing spimes. Collections are a spime concept.
